Survey drafting tips for response quality

Effective survey design is your first line of defence for response quality: it keeps respondents engaged while building in safeguards that help filter out less reliable participants.

The eight evidence-based tips below draw on insights from thousands of research projects on the Conjointly platform. They will help you build quality controls into any survey, regardless of your sample source.

1. Include open-ended questions

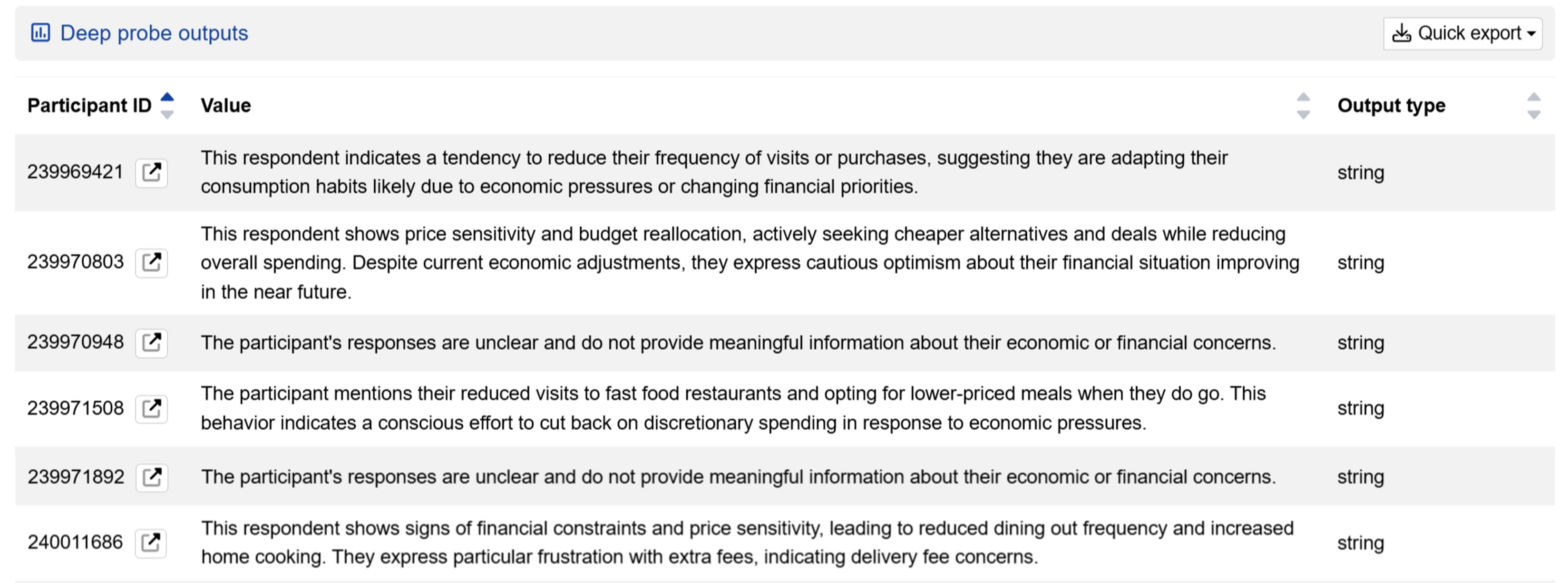

Open-ended questions are effective engagement indicators because they require participants to write thoughtful answers rather than simply click through options. Conjointly offers automated quality checks to help you easily identify sloppy open-ended responses.

Even if your focus is primarily quantitative, include at least one open-ended question in your survey. You can analyse these responses easily using Deep probe.

Here are some additional recommendations to help you maximise the effectiveness of open-ended questions:

- Avoid questions that invite short, predictable answers, such as “What brand do you prefer?”, as they don’t reveal how engaged a respondent is.

- Ask questions that provide research value. For example, “Even if you wouldn’t use this product yourself, what type of person do you think would benefit most from it?”.

- Set minimum character requirements, such as 10 characters, to prevent minimal responses like “n/a” or “no”.
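To make the minimum-length rule concrete, here is a minimal Python sketch of the check it applies, for example when screening exported responses. The function name `meets_min_length` and the 10-character default are illustrative assumptions based on the example above, not part of Conjointly's product.

```python
def meets_min_length(answer: str, min_chars: int = 10) -> bool:
    """Return True if the trimmed answer has at least `min_chars` characters.

    Hypothetical check mirroring a minimum-character requirement.
    Leading and trailing whitespace is ignored so padding can't satisfy it.
    """
    return len(answer.strip()) >= min_chars


answers = ["n/a", "no", "Busy parents who want quick breakfasts"]
too_short = [a for a in answers if not meets_min_length(a)]
print(too_short)  # ['n/a', 'no']
```

A length floor only blocks the shortest non-answers; it does not catch gibberish or pasted filler, which is why it works best alongside automated quality checks.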

2. Randomise questions and options

Question and answer order can influence responses through order bias, where respondents favour early options or become influenced by previous questions. Enable randomisation, flipping, or rotation features in your surveys and group questions into randomisation blocks wherever the logical flow allows.

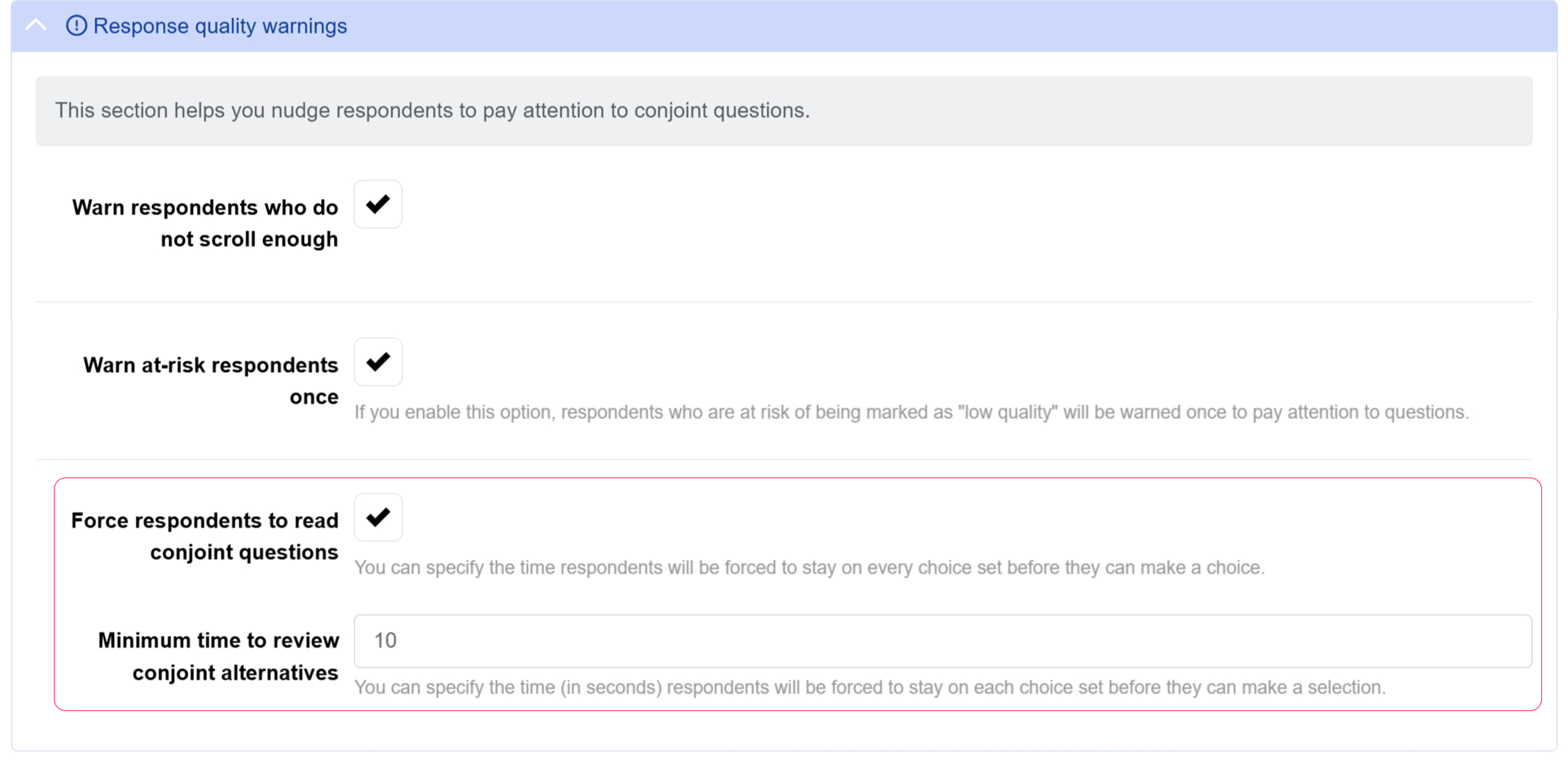
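The three ordering options behave differently. The sketch below assumes the usual survey-software meanings: randomisation shuffles options independently for each respondent, flipping shows the original order to half of respondents and the reversed order to the other half, and rotation cyclically shifts the starting option while keeping the relative order. The function names and the use of a respondent index are hypothetical illustrations, not Conjointly's implementation.

```python
import random


def randomise(options, rng):
    """Return a fully shuffled copy: any order can appear."""
    shuffled = list(options)
    rng.shuffle(shuffled)
    return shuffled


def flip(options, respondent_index):
    """Original order for even-numbered respondents, reversed for odd-numbered ones."""
    if respondent_index % 2 == 0:
        return list(options)
    return list(reversed(options))


def rotate(options, respondent_index):
    """Cyclically shift the list so each option leads equally often."""
    k = respondent_index % len(options)
    return list(options[k:]) + list(options[:k])


opts = ["Option A", "Option B", "Option C", "Option D"]
print(flip(opts, 1))    # ['Option D', 'Option C', 'Option B', 'Option A']
print(rotate(opts, 1))  # ['Option B', 'Option C', 'Option D', 'Option A']
print(randomise(opts, random.Random(42)))  # some shuffled order
```

Flipping and rotation suit ordered lists such as rating scales, where a fully random order would confuse respondents, while full randomisation suits unordered lists like brand names.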

3. Set minimum response times for conjoint questions

Complex choice tasks like conjoint questions require careful consideration of trade-offs, yet even diligent respondents may be tempted to rush through them. Setting a minimum review time of 5-10 seconds for conjoint alternatives, depending on task complexity, encourages more thoughtful responses without interfering with other quality detection methods such as mouse tracking and scrolling behaviour.

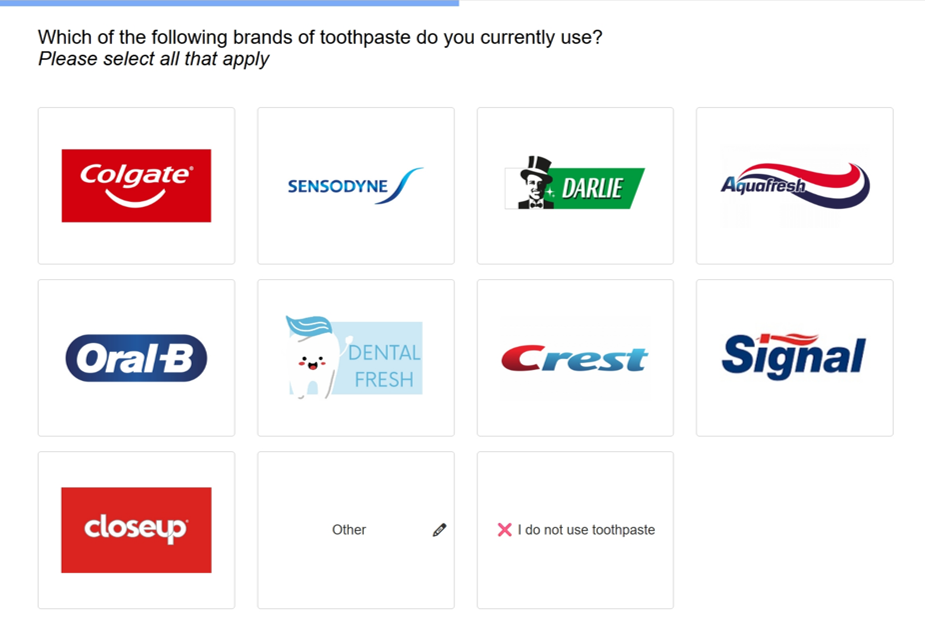

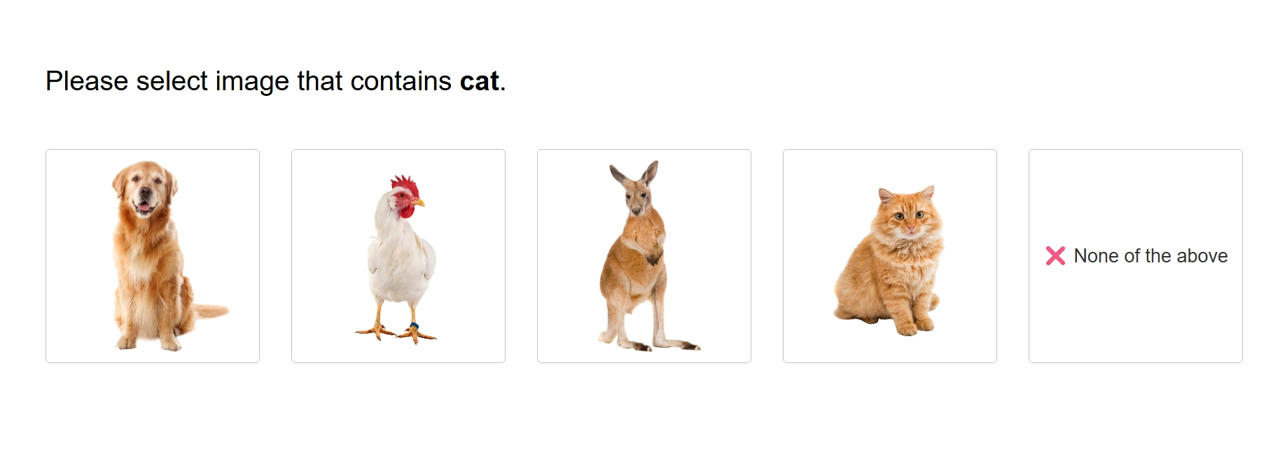

4. Add trap options strategically

Trap options like fictional brands or products help identify respondents who overclaim usage or rush through surveys without reading carefully. You can include these options in your usage questions. For example, mix “Dental Fresh” among actual toothpaste brands like Colgate and Sensodyne.

However, avoid using them in awareness questions, as genuine respondents might confuse fictional options with real brands they vaguely remember. In addition, make sure trap options sound realistic but neither exist nor resemble real brands so closely that respondents could make innocent mistakes. The goal is detecting overclaiming, not tricking participants.
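Once responses are in, spotting trap selections is a simple set intersection. This minimal sketch assumes the usage question is exported as a list of selected brand names; `TRAP_OPTIONS` and `selected_trap` are hypothetical names, and “Dental Fresh” is the fictional brand from the example above.

```python
# Fictional brand(s) mixed into the usage question (hypothetical setup).
TRAP_OPTIONS = {"Dental Fresh"}


def selected_trap(selected_brands):
    """Return True if the respondent claims to use any fictional (trap) brand."""
    return bool(TRAP_OPTIONS & set(selected_brands))


print(selected_trap(["Colgate", "Sensodyne"]))     # False
print(selected_trap(["Colgate", "Dental Fresh"]))  # True
```

Treating the result as one flag to weigh alongside other signals, rather than an automatic exclusion, keeps the focus on detecting overclaiming.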

5. Test market knowledge

Ask respondents about concrete details they should know if they genuinely use a product or service. Price questions work particularly well, as real users typically know what they pay for regularly purchased items and services. You can also ask about wait times, locations, or specific product features.

Use open-ended or numerical input questions rather than multiple choice to prevent guessing. Set realistic tolerance ranges when evaluating answers, since genuine users may recall prices or wait times only approximately.

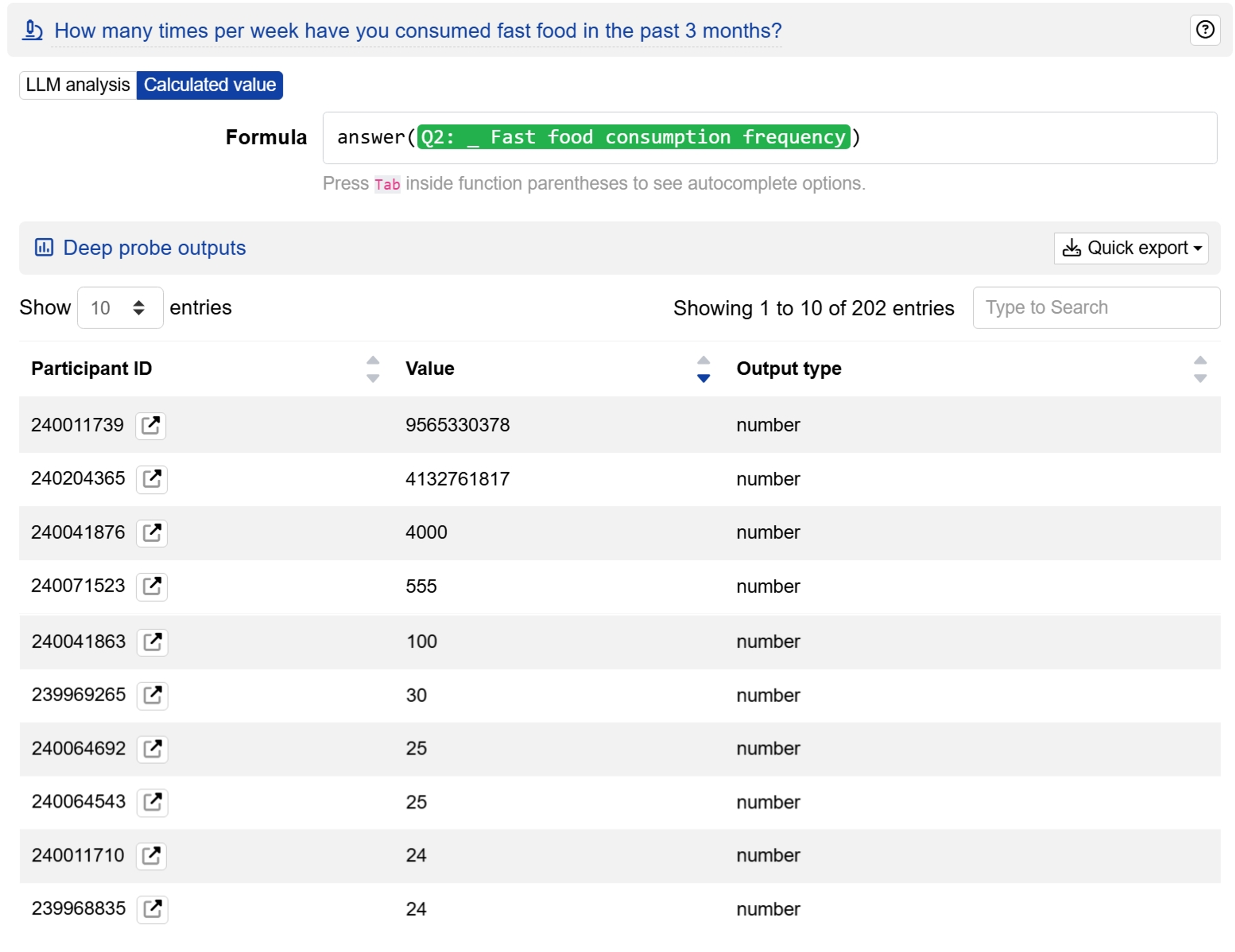
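A tolerance range can be expressed as a band around a reference value. In this sketch, the reference price of 5.00 and the ±50% band are arbitrary illustrative assumptions, not recommendations; the right band depends on how much prices vary across stores, regions, and pack sizes.

```python
def within_tolerance(reported, reference_price, tolerance=0.5):
    """True if `reported` lies within ±tolerance (a fraction) of `reference_price`."""
    low = reference_price * (1 - tolerance)
    high = reference_price * (1 + tolerance)
    return low <= reported <= high


# Hypothetical reference price of 5.00, so the accepted band is 2.50 to 7.50.
print(within_tolerance(4.20, 5.00))  # True
print(within_tolerance(40, 5.00))    # False
```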

6. Include purchase behaviour questions

Volume and frequency questions help reveal unrealistic claiming patterns, such as respondents claiming to buy excessive quantities or shop far more frequently than typical users. Beyond identifying unreliable responses, these questions also provide valuable segmentation data and help contextualise pricing studies by prompting respondents to consider their actual spending patterns.

As with market knowledge questions, use open-ended or numerical input rather than multiple choice to prevent guessing. When evaluating responses, allow for outliers: some people have purchasing habits that look unusual but are genuinely legitimate.
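One common way to surface extreme volume claims for review is a Tukey-style upper fence: flag values above Q3 + k × IQR. This is a swapped-in general statistical technique, not Conjointly's method, and the sample data and `k=3.0` (Tukey's "far out" threshold) are illustrative assumptions. Flagged answers are candidates for a closer look, not automatic exclusions.

```python
from statistics import quantiles


def flag_extreme_volumes(volumes, k=3.0):
    """Return indices of answers above the upper fence Q3 + k * IQR."""
    q1, _, q3 = quantiles(volumes, n=4)  # quartile cut points
    upper_fence = q3 + k * (q3 - q1)
    return [i for i, v in enumerate(volumes) if v > upper_fence]


# Hypothetical answers to "How many tubes of toothpaste do you buy per month?"
monthly_tubes = [1, 1, 2, 1, 2, 3, 1, 2, 40, 1]
print(flag_extreme_volumes(monthly_tubes))  # [8]
```

Quartiles are used instead of the mean and standard deviation because a single extreme answer inflates the latter and can mask itself.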

7. Avoid consistency traps

Don’t add questions designed to catch inconsistent responses, whether about opinions or facts.

Opinion inconsistencies often reflect genuinely changing or uncertain views, which represent valuable research data. For instance, when a respondent rates a product differently across various question formats, this may be because their opinion is still forming or depends on context. This uncertainty is worth capturing, not eliminating.

On the other hand, factual inconsistencies usually result from normal human errors like misclicks or typos rather than indicating poor overall response quality. Excluding respondents for single factual errors can bias your sample and remove important perspectives.

8. Avoid attention checks

Don’t include instructional questions like “Please select Option 3” or basic knowledge tests like “The Earth is flat. True or False?” in your surveys. These attention checks have no proven benefit for research quality. Instead, they may signal suspicion rather than respect, and respondents who feel tested or distrusted can become less engaged, lowering response quality.

These eight techniques help you build quality controls directly into your survey design, improving response reliability from the start. For more detailed guidance on survey construction, see these survey scripting best practices, which cover a wide range of question types and research goals.

Next steps

Once your survey design is ready, you can proceed with choosing participants and begin your data collection. Conjointly’s quality assurance processes provide multiple protection layers throughout your research project to ensure reliable results.

Do you have any questions? Get in touch with a market research expert today.