Quality assurance for Self-serve sample

Self-serve sample provides complete control over respondent targeting and data collection. It is ideal for researchers who need data quickly and prefer a hands-on approach.

Conjointly has achieved ISO 20252:2019 certification for its sampling services (Annex A), confirming that both Predefined panels and Self-serve sample meet international standards for rigour, transparency and ethical conduct.

This guide builds on the guide to getting started with Self-serve sample and covers the quality assurance options available to you.

Automated quality checks

When you purchase responses through Self-serve sample, Conjointly applies automated checks by default to identify fraud and inattentiveness during the survey. Respondents are automatically marked as low quality if any of the following signs are detected:

- Evasion of network detection (respondents deliberately blocking our network detection systems, which may indicate an attempt to circumvent our quality control measures).

- Quick completion of the full survey (e.g. within 20 seconds).

- Rapid selection of conjoint choice sets (e.g. within 1 second).

- Absence of mouse movement even though a mouse is present on the device.

- Lack of scrolling when it’s needed to read the full question.

- Submission of unusual answers in open-ended questions.

- Any violation of the response quality management options you have enabled.

Additionally, the system will automatically remove participants with 70% duplicated open-ended answers.
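Several of these checks are simple rules, so a simplified version can be sketched in a few lines. The Python below is a minimal illustration under stated assumptions, not Conjointly's implementation. The `Response` fields, the `flag_reasons` function and its reason tags are hypothetical. The thresholds are the example values given above (20 seconds, 1 second, 70%). "70% duplicated open-ended answers" is interpreted here, as an assumption, as at least 70% of a respondent's own open-ended answers repeating another of their answers after trimming and lower-casing.

```python
from collections import Counter
from dataclasses import dataclass, field

# Hypothetical thresholds, using the example values from the list above.
MAX_SURVEY_SECONDS = 20.0      # full survey completed within this time -> too fast
MAX_CHOICE_SET_SECONDS = 1.0   # any conjoint choice set answered within this time -> too fast
DUPLICATE_SHARE_LIMIT = 0.70   # assumed reading of "70% duplicated open-ended answers"


@dataclass
class Response:
    """Hypothetical per-respondent record; field names are illustrative only."""
    survey_seconds: float
    choice_set_seconds: list[float] = field(default_factory=list)
    has_mouse: bool = False
    mouse_moved: bool = False
    open_ended: list[str] = field(default_factory=list)


def duplicate_share(answers: list[str]) -> float:
    """Share of answers whose trimmed, lower-cased text occurs more than once."""
    if not answers:
        return 0.0
    normalised = [a.strip().lower() for a in answers]
    counts = Counter(normalised)
    return sum(1 for a in normalised if counts[a] > 1) / len(normalised)


def flag_reasons(r: Response) -> list[str]:
    """Return one hypothetical reason tag per rule broken; an empty list means the response passes."""
    reasons = []
    if r.survey_seconds <= MAX_SURVEY_SECONDS:
        reasons.append("survey_too_fast")
    if any(t <= MAX_CHOICE_SET_SECONDS for t in r.choice_set_seconds):
        reasons.append("choice_set_too_fast")
    if r.has_mouse and not r.mouse_moved:
        reasons.append("no_mouse_movement")
    if duplicate_share(r.open_ended) >= DUPLICATE_SHARE_LIMIT:
        reasons.append("duplicated_open_ends")
    return reasons


if __name__ == "__main__":
    suspicious = Response(
        survey_seconds=15,
        choice_set_seconds=[0.4, 3.0],
        has_mouse=True,
        mouse_moved=False,
        open_ended=["good", "Good", "good ", "nice"],
    )
    print(flag_reasons(suspicious))
    # ['survey_too_fast', 'choice_set_too_fast', 'no_mouse_movement', 'duplicated_open_ends']
```

Checks that rely on browser or network signals, such as network detection evasion and scrolling, are left out of the sketch because the signals behind them are not described here.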

Additional quality control options

The Quality Checker gives you real-time visibility into response quality during data collection, helping you optimise survey performance and data reliability at any stage.

Checking flagged responses

When responses are automatically flagged as low quality, each comes with a specific reason tag. You can use this information to spot patterns and identify potential improvements to your survey setup.

High volumes of flagged responses are uncommon. When they do occur, typical underlying factors include:

- Survey design elements like question clarity or instruction flow.

- Targeting criteria that may not match your intended respondent profile.

- Technical factors such as display or navigation issues.

You have the flexibility to pause data collection to make adjustments, or continue collecting while monitoring exclusion rates to ensure they stay within acceptable bounds for your target sample size.
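One rough way to judge whether exclusions are within acceptable bounds is to project how many included responses you will end up with if the current exclusion rate persists. The sketch below is illustrative only: the function names are hypothetical, and it assumes that flagged responses are not replaced within the same data collection and that the remaining responses will be flagged at the same rate as those collected so far.

```python
import math


def exclusion_rate(collected: int, flagged: int) -> float:
    """Share of collected responses flagged as low quality so far."""
    return flagged / collected if collected else 0.0


def projected_shortfall(target: int, purchased: int, collected: int, flagged: int) -> int:
    """Included responses still missing from `target` once all `purchased`
    responses are in, assuming the current exclusion rate holds."""
    if collected == 0:
        return max(0, target - purchased)  # no data yet: assume nothing is excluded
    # (collected - flagged) / collected is the current inclusion rate.
    expected_included = purchased * (collected - flagged) / collected
    return max(0, math.ceil(target - expected_included))


if __name__ == "__main__":
    # 100 of 300 purchased responses collected so far, 12 of them flagged.
    print(exclusion_rate(100, 12))                 # 0.12
    print(projected_shortfall(300, 300, 100, 12))  # 36
```

In this example, about 36 included responses would be missing at the end, a gap you could cover with a new data collection.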

Reviewing included responses

Beyond the automated checks, you can choose to manually review responses that passed initial screening. This gives you an extra layer of quality control tailored to your specific research requirements.

Areas you might examine include:

- Response consistency across related questions.

- Open-ended response quality and relevance.

- Alignment between responses and your screening criteria.

- Response patterns that could indicate systematic issues.

This additional review process can provide deeper insights into your data quality and inform adjustments to survey design or targeting for current or future studies.

Preview and pilot test your survey before full launch

Preview your survey as a respondent to confirm that functionality and user experience work as intended, then launch a small pilot of 10 to 50 responses. This helps identify potential issues early and allows you to refine targeting or survey elements based on initial response patterns.

Managing response quality during data collection and the reconciliation period

Conjointly recommends monitoring response quality with the Quality Checker throughout data collection so you can identify and address issues early.

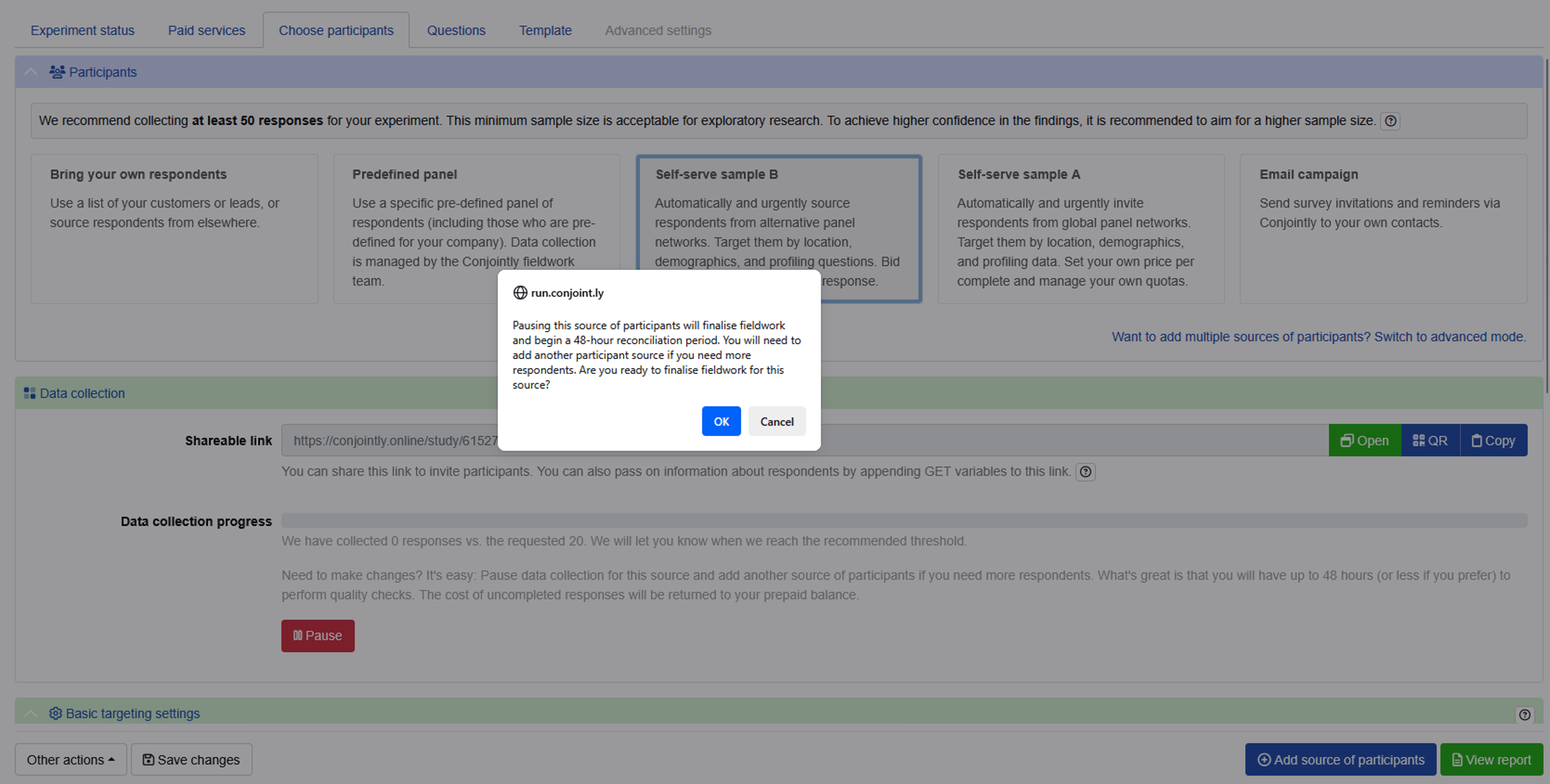

With Self-serve sample, you can pause data collection when you need to make adjustments. Once paused, data collection cannot be reactivated or continued.

The 48-hour reconciliation period automatically begins when your data collection is completed or paused. You will receive an email notification from Conjointly confirming the start of the reconciliation period.

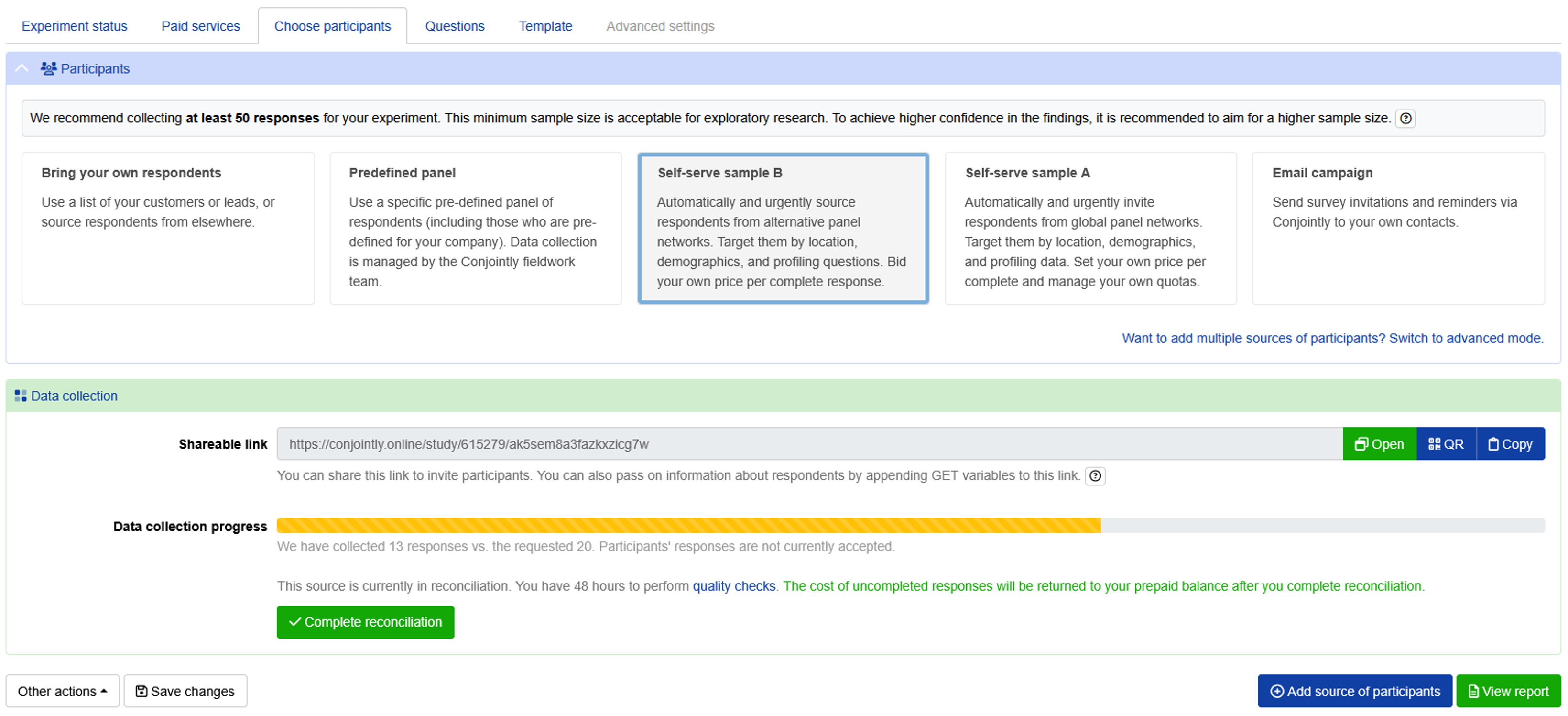

During this period, you can perform quality checks on collected responses.
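Because the reconciliation period is a fixed 48 hours from the moment data collection is completed or paused, its end time is simple date arithmetic. A minimal sketch, with hypothetical function names and the assumption that you record the stop time yourself in UTC:

```python
from datetime import datetime, timedelta, timezone

RECONCILIATION_WINDOW = timedelta(hours=48)


def reconciliation_deadline(stopped_at: datetime) -> datetime:
    """End of the 48-hour window that starts when collection is completed or paused."""
    return stopped_at + RECONCILIATION_WINDOW


def hours_remaining(stopped_at: datetime, now: datetime) -> float:
    """Hours left in the window (0 once it has ended)."""
    left = reconciliation_deadline(stopped_at) - now
    return max(0.0, left.total_seconds() / 3600)


if __name__ == "__main__":
    paused = datetime(2024, 5, 6, 9, 0, tzinfo=timezone.utc)
    print(reconciliation_deadline(paused).isoformat())  # 2024-05-08T09:00:00+00:00
    print(hours_remaining(paused, datetime(2024, 5, 7, 21, 0, tzinfo=timezone.utc)))  # 12.0
```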

When the 48-hour period ends, or earlier if you choose to complete reconciliation sooner, you can click Complete reconciliation.

Once reconciliation is completed, you can no longer include or exclude respondents from analysis, and your proforma invoice converts to final billing. Credits for any incomplete responses are automatically returned to your prepaid balance.

You can use the credits freed up from excluding low-quality responses to launch a new data collection, ensuring you receive the required number of responses for your research.

If you require highly specific sample criteria or prefer professionally hand-checked responses, Conjointly’s Predefined panels offer comprehensive quality assurance with dedicated research team oversight.