AI summary of open-ended text responses

With Conjointly’s AI summary of open-ended text responses functionality, you can automatically obtain high-level summaries and sentiment analyses for all open-ended questions in your reports.

From USD 0.15 per respondent, the function summarises open-ended responses to the following question types:

- Short text questions.

- Paragraph input questions.

- Positive/negative open-ended feedback questions.

- The ‘Other’ option for multiple choice and dropdown menu questions.

- Comments for highlighters in text highlighter and image heatmap questions.

- Open-ended diagnostics for Claims Test, Product Variant Selector, and Brand-Price Trade-Off.

How to enable AI summary of open-ended text responses?

Here are two intuitive ways to enable AI summary of open-ended text responses in your experiment reports.

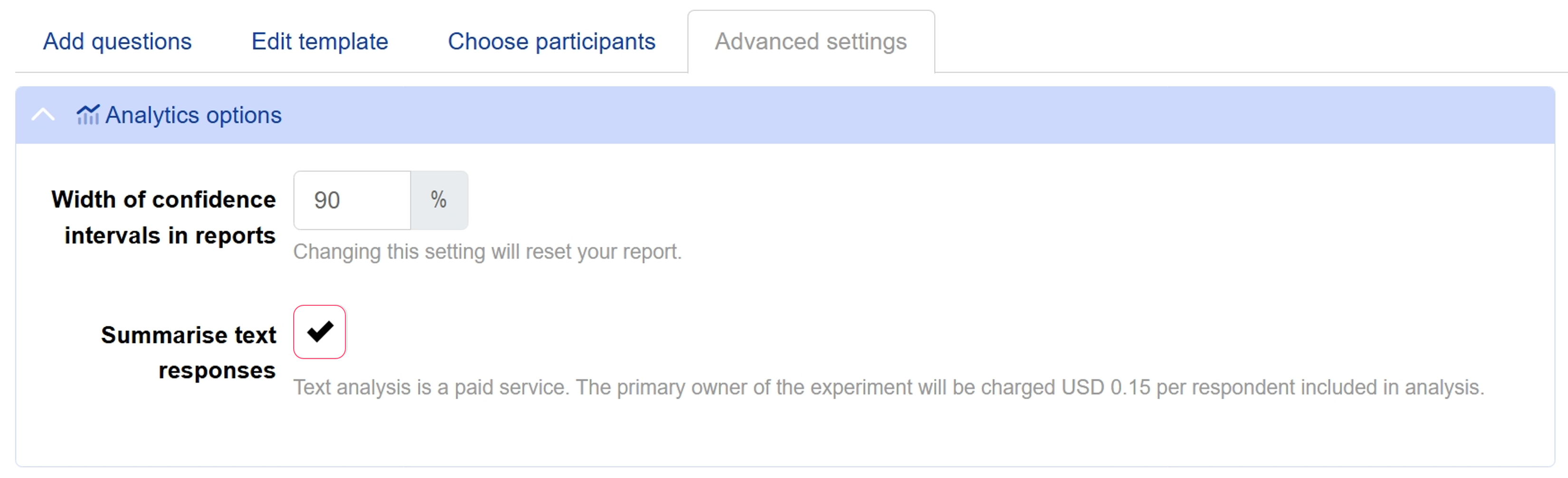

Method 1: Via Experiment Settings

Navigate to the Analytics options tab under Advanced Settings of your experiment, and tick the checkbox next to Summarise text responses.

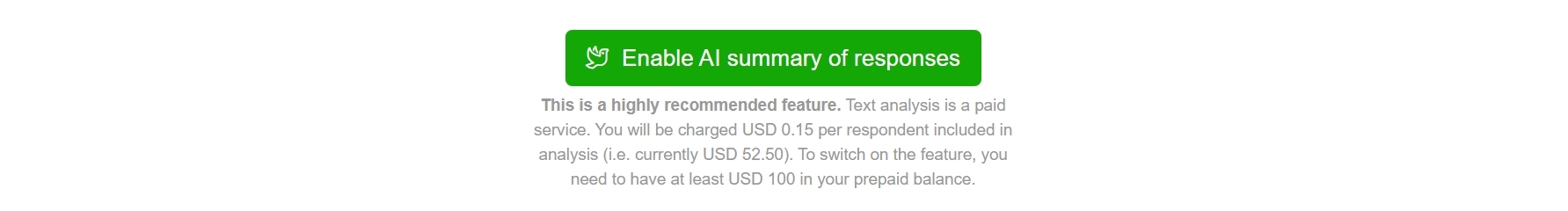

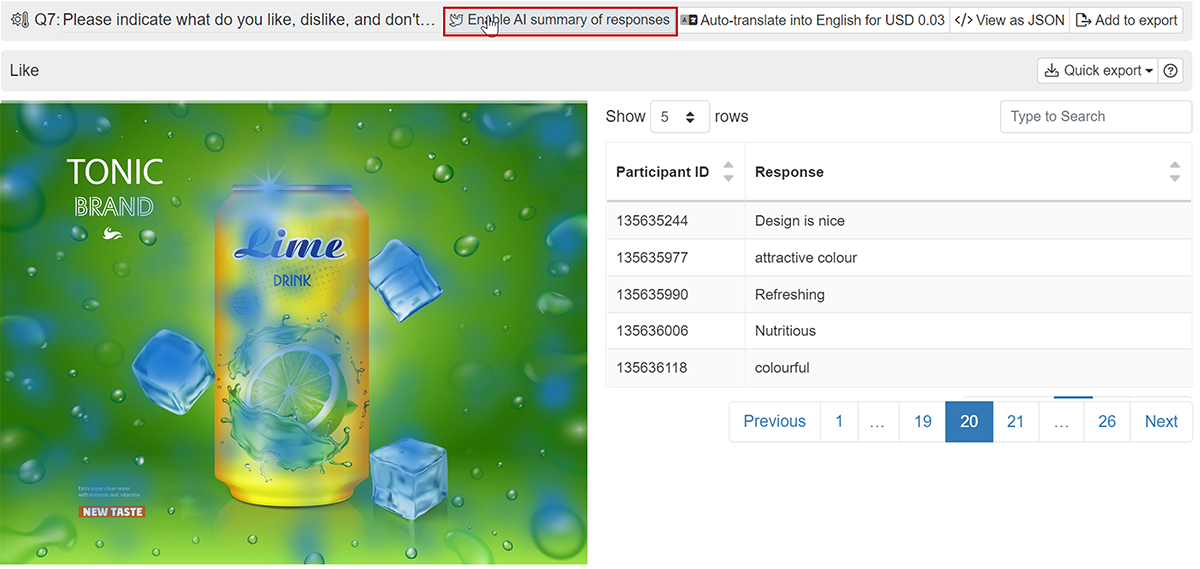

Method 2: Via Report Dashboard

When viewing reports from experiments without AI summary enabled, the button will appear on applicable sections and questions. Click the button to activate the function.

For comments in text highlighter and image heatmap questions, the button is located on the question tab.

If you are the primary owner of the experiment, you will be prompted to set up auto top-up if you haven’t already. You must also maintain a minimum prepaid balance of USD 100 to enable AI summaries of open-ended text responses. Once enabled, your prepaid balance will be charged USD 0.15 per respondent included in the analysis.

If you are not the primary owner of the experiment, you can still enable the feature, but the charges will be applied to the primary owner’s prepaid balance.
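As a rough illustration of the charges described above, the sketch below estimates the cost for a given number of respondents. It assumes a flat rate of USD 0.15 per respondent (the starting price quoted in this article) and the USD 100 minimum prepaid balance. The function names are hypothetical and are not part of any Conjointly API.

```python
from decimal import Decimal

# Figures taken from this article; the flat rate is an assumption,
# since pricing is quoted as "from USD 0.15 per respondent".
PRICE_PER_RESPONDENT = Decimal("0.15")  # USD
MIN_PREPAID_BALANCE = Decimal("100")    # USD


def estimate_charge(respondents: int) -> Decimal:
    """Estimated charge in USD for respondents included in analysis."""
    if respondents < 0:
        raise ValueError("respondents must be non-negative")
    return PRICE_PER_RESPONDENT * respondents


def meets_minimum_balance(balance) -> bool:
    """True if the prepaid balance is at least the stated minimum."""
    return Decimal(str(balance)) >= MIN_PREPAID_BALANCE


if __name__ == "__main__":
    print(estimate_charge(400))          # charge for 400 respondents
    print(meets_minimum_balance("250"))  # balance check
```

Under these assumptions, an experiment with 400 respondents included in analysis would be charged USD 60 to the primary owner’s prepaid balance.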

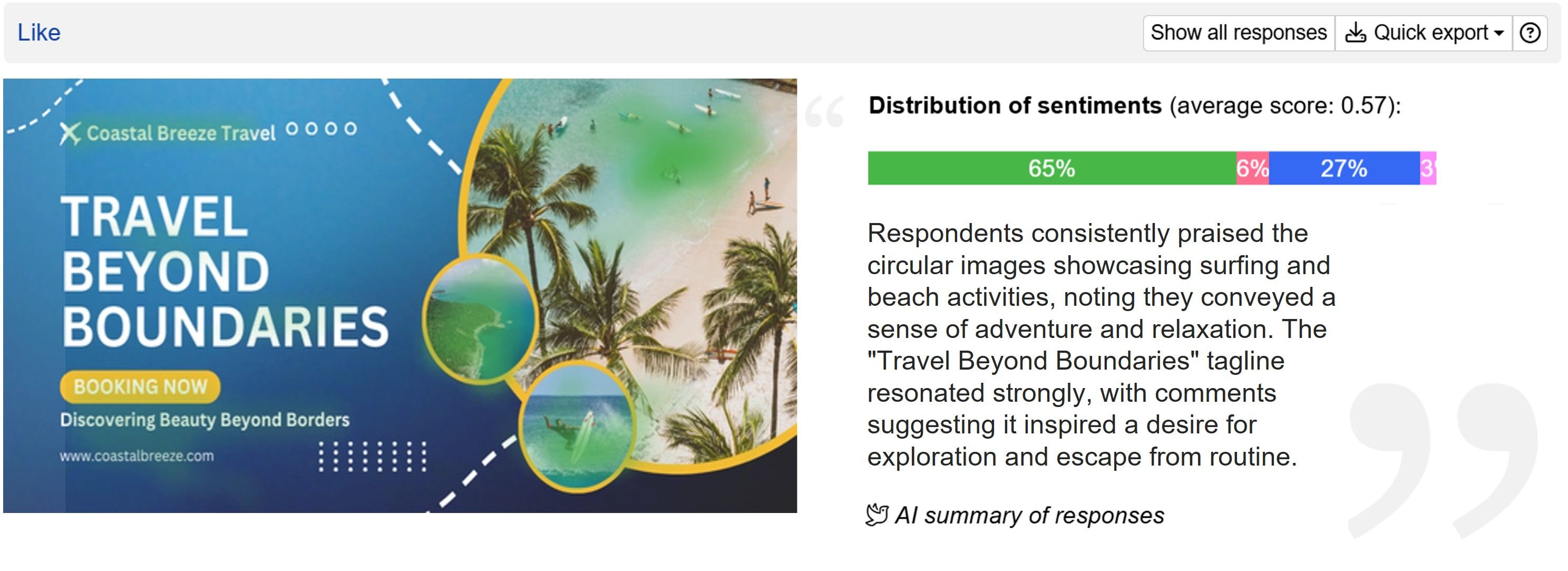

Sentiment analysis

Sentiment analysis helps you analyse respondents’ text responses to determine whether the dominant emotion is positive, negative, mixed, or neutral. The analysis is automatically included when performing an AI summary of text responses, and there are no additional charges for this functionality.

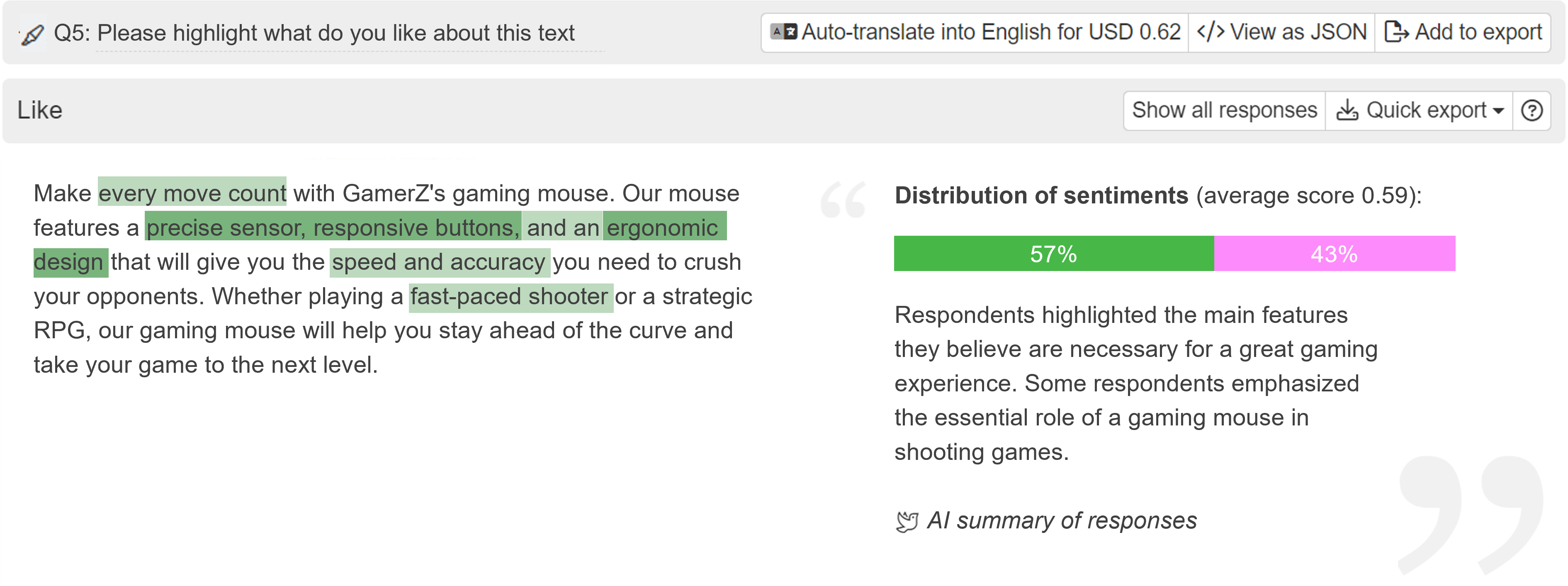

Interpreting sentiment analysis results for individual questions

For every open-ended question, the sentiment analysis outputs show the distribution of sentiments, which includes:

- The average sentiment score of responses, which ranges from the most negative (-1) to the most positive (1).

- A coloured bar chart showing the percentage of responses in each sentiment category: green for positive, pink for negative, blue for neutral, and violet for mixed.

The following information is also displayed for individual responses to short text, paragraph input, positive/negative open-ended feedback, and the ‘Other’ option for multiple choice and dropdown menu questions:

- The prevailing sentiment determined by the natural language processing model.

- The sentiment score, which ranges from -1 (indicating a 100% likelihood that the response has a negative sentiment) to 1 (indicating a 100% likelihood that the response has a positive sentiment).

You can also filter the responses by sentiment using the dropdown menu.
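To make the outputs above more concrete, here is a minimal sketch of how an average sentiment score, a percentage breakdown by category, and a sentiment filter could be computed from per-response results. The sample data, the tuple layout, and the function names are hypothetical. This is not how Conjointly computes its results internally.

```python
from collections import Counter
from statistics import mean

CATEGORIES = ("positive", "negative", "neutral", "mixed")

# Hypothetical per-response results: (text, prevailing sentiment, score in [-1, 1])
responses = [
    ("Love the new flavour", "positive", 0.8),
    ("Too expensive for what it is", "negative", -0.6),
    ("It is a drink", "neutral", 0.0),
    ("Tasty but the bottle leaks", "mixed", 0.2),
]


def summarise_sentiment(scored):
    """Return (average score, {category: percentage of responses})."""
    average = mean(score for _, _, score in scored)
    counts = Counter(label for _, label, _ in scored)
    shares = {c: 100 * counts.get(c, 0) / len(scored) for c in CATEGORIES}
    return average, shares


def filter_by_sentiment(scored, sentiment):
    """Keep only the responses whose prevailing sentiment matches."""
    return [row for row in scored if row[1] == sentiment]


if __name__ == "__main__":
    average, shares = summarise_sentiment(responses)
    print(round(average, 2), shares)
    print(filter_by_sentiment(responses, "negative"))
```

The percentage breakdown corresponds to the coloured bar chart, and the filter mirrors the dropdown menu in the report.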

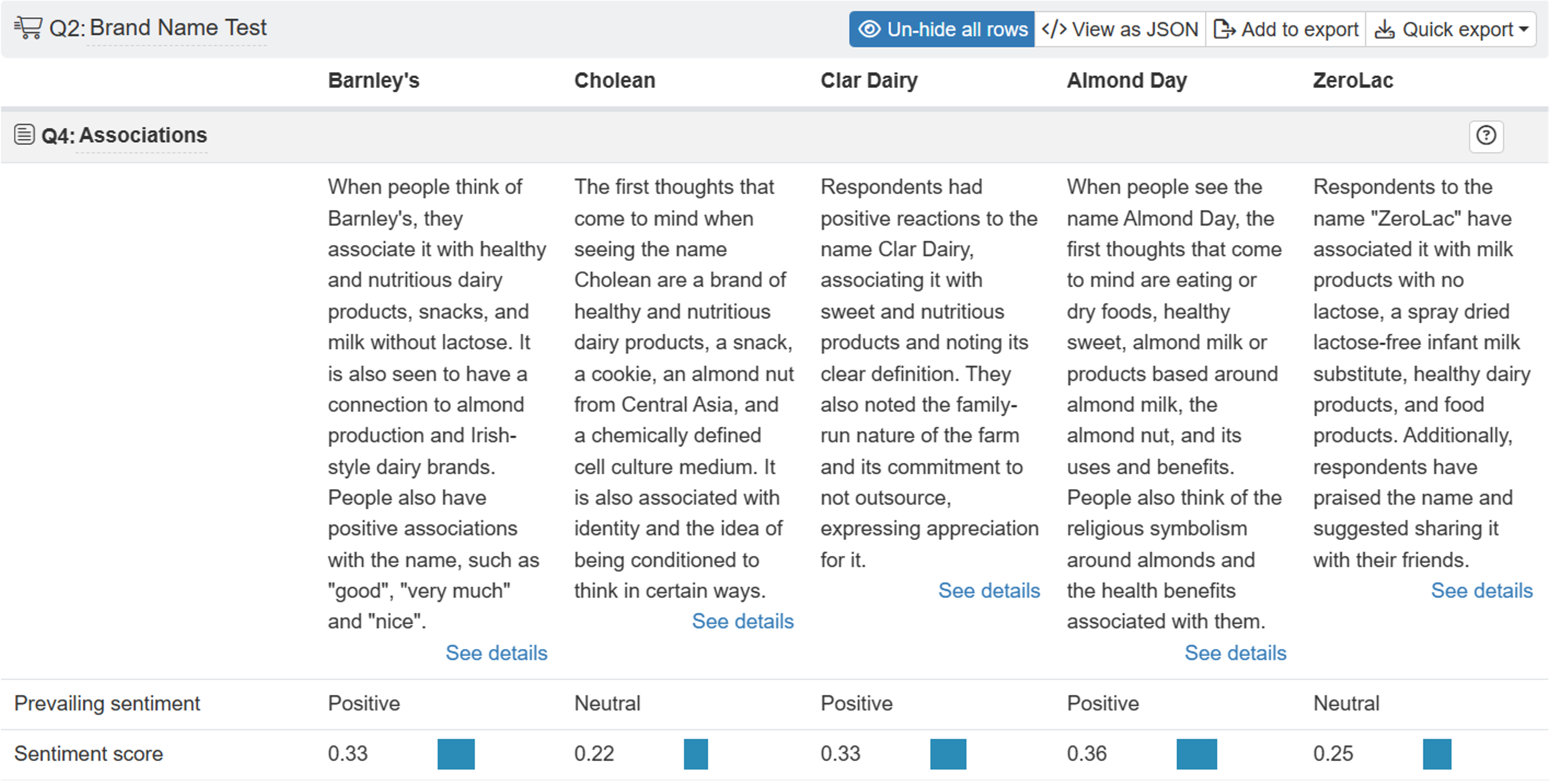

Interpreting sentiment analysis results for monadic blocks

For monadic blocks, the prevailing sentiment and the sentiment score are displayed for each stimulus in open-ended questions.

Using sentiment analysis results for segmentation

Follow these steps to create segmentation in your report using sentiment analysis results.

- In your report, navigate to Segmentation.

- Click on .

- On the pop-up, select .

- Specify the question, item (only if applicable), operator, and sentiment from the dropdown menus.

FAQs

How do AI summaries of open-ended text responses work?

The AI summaries of open-ended text responses are powered by large language models operated by our subprocessors such as OpenAI and Anthropic. All responses are preprocessed to remove answers that do not contain valuable information, such as “Nothing”, “N/A”, and empty answers. Then, the AI-based model processes and summarises the content.
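The preprocessing step described above can be sketched as a simple filter. The list of uninformative answers below contains only the examples named in this article ("Nothing", "N/A", and empty answers). The actual rules Conjointly applies are not documented here, so treat the matching logic and the function name as assumptions.

```python
# Illustrative list only; the real set of filtered answers is not documented here.
UNINFORMATIVE = {"nothing", "n/a"}


def drop_uninformative(responses):
    """Remove empty answers and answers that carry no useful information."""
    kept = []
    for text in responses:
        # Normalise case, surrounding whitespace, and trailing punctuation
        normalised = text.strip().lower().rstrip(".!")
        if normalised and normalised not in UNINFORMATIVE:
            kept.append(text.strip())
    return kept


if __name__ == "__main__":
    raw = ["Great taste", "N/A", "   ", "nothing.", "Too pricey"]
    print(drop_uninformative(raw))
```

Only the responses that survive this kind of filtering would then be passed on for summarisation.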

While large language models are a great help with natural language tasks that would otherwise require manual human work, it is not always clear exactly why a model produces a certain output. How these models arrive at their outputs is an active area of research.

Conjointly encourages treating AI summaries as high-level summaries that take into consideration all text inputs with valuable information.