Coding open-ended responses

Extracting themes and insights from open-ended responses typically involves reading through every response, translating multilingual content, interpreting meanings and categorising findings manually. With Deep probe, you can turn open-ended responses into structured data within minutes, saving time and reducing the risk of human error.

The example below shows you how Deep probe’s LLM analysis converts open-ended feedback into clear categories.

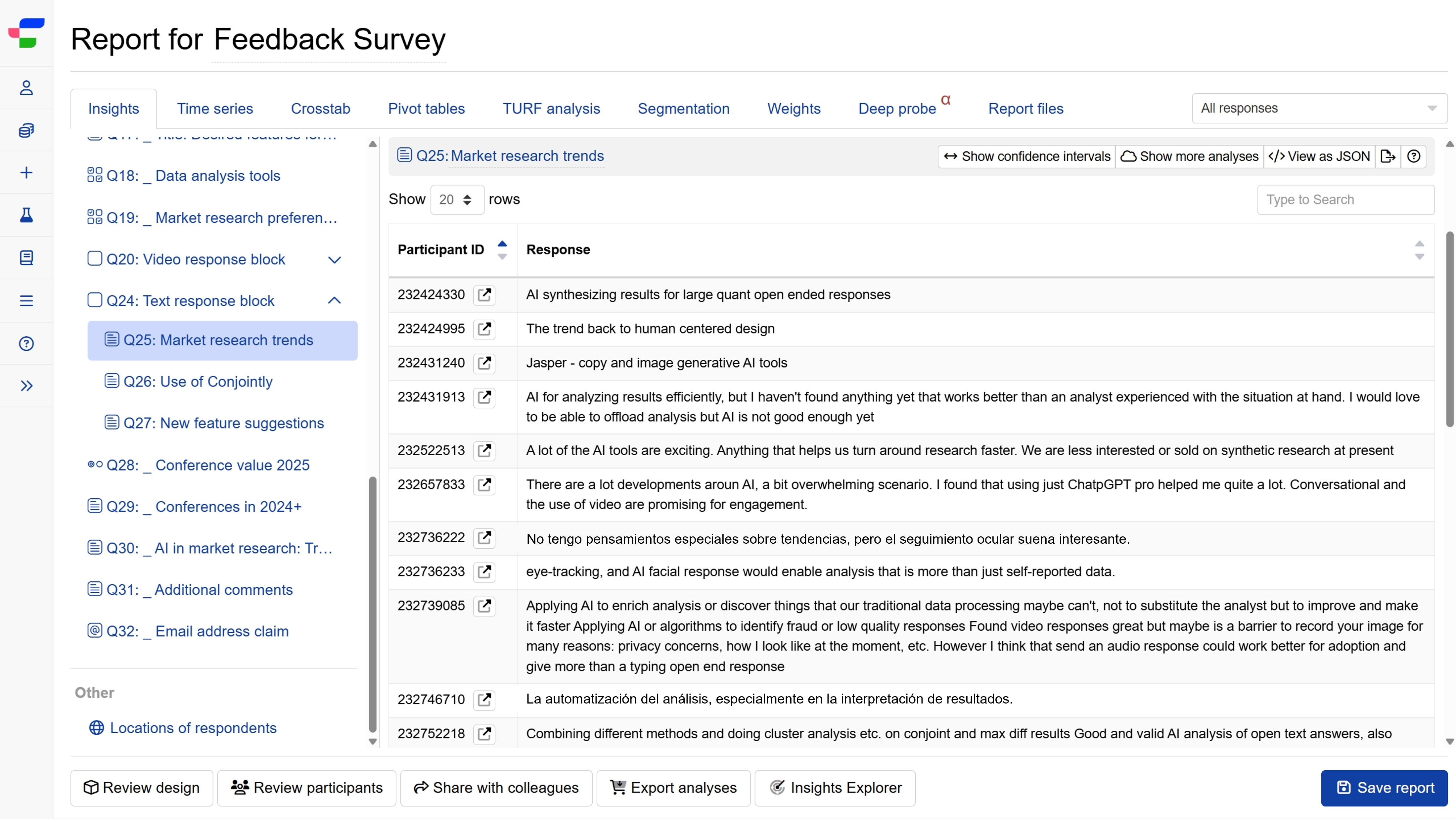

Working example: Categorising market research trend insights

A market research company collected open-ended feedback from its global user base about trends in the market research industry. The survey contained several open-ended questions, and responses came in multiple languages. The researchers needed to categorise these responses into themes quickly for analysis.

To avoid translating and coding each respondent's answer individually, the researchers added a deep probe that identifies and groups responses by topic, regardless of language or response length.

Deep probe setup

- Navigate to the Deep probe tab in the experiment report and click .

- Select .

- Insert the analysis request, for example:

Code open ends into several topics

The system suggests categories as the output type, with options such as “emerging technologies”, “AI applications”, “research methodologies”, “data analysis techniques”, “industry trends” and “challenges and concerns”.

- After reviewing the suggested categories, researchers can accept the recommendations or modify them based on their analysis objectives. In this example, the researcher accepted all suggested categories.

Generated prompt for analysis

The system generates an analysis prompt based on these settings. The quality of the final output depends on this prompt and on how the LLM processes it, so refine the prompt and preview the results before processing begins to check that it captures what you want to discover.

The following shows an example prompt generated by the LLM based on the request in this example.

Request: Code open ends into several topics

Response:

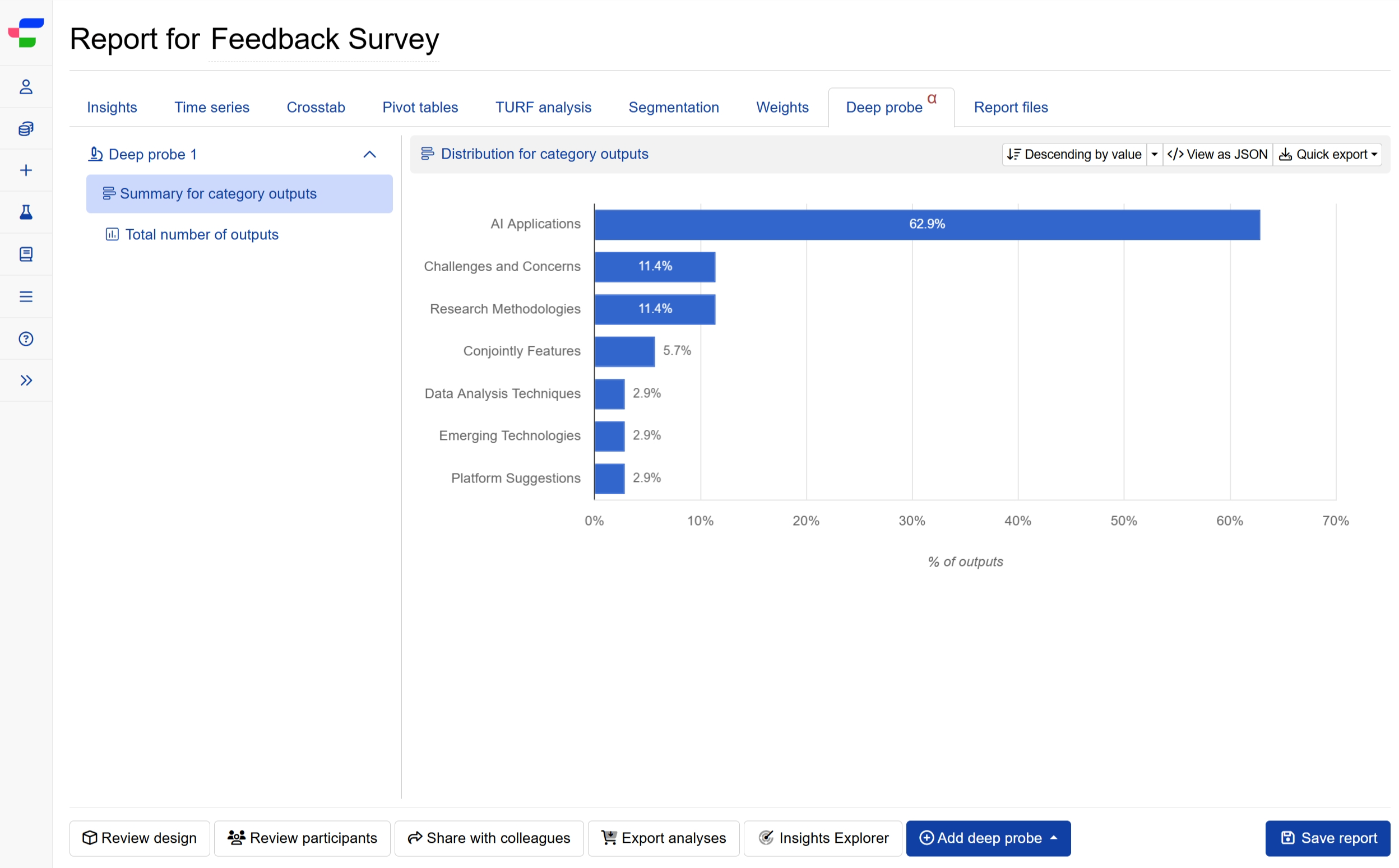

Outputs overview

In this case, the Deep probe assigns a main topic category to each respondent. Classification accuracy depends on the prompt and on LLM processing, so 100% accuracy cannot be guaranteed. Conjointly recommends reviewing the outputs and editing individual results where needed.
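One way to review the outputs is to hand-code a small sample of respondents yourself and compare your codes with the Deep probe results. The following is a minimal Python sketch of that spot-check, assuming you have exported the results as a mapping from respondent ID to category. The function names, respondent IDs and labels are hypothetical illustrations, not part of Conjointly.

```python
# Hypothetical spot-check: compare the LLM-assigned category for each respondent
# with a category assigned by hand to the same respondent.
# IDs and labels below are illustrative, not real survey data.


def agreement_rate(llm_codes: dict[str, str], manual_codes: dict[str, str]) -> float:
    """Return the share of hand-coded respondents whose LLM category matches."""
    checked = [rid for rid in manual_codes if rid in llm_codes]
    if not checked:
        raise ValueError("No overlapping respondents to compare")
    matches = sum(llm_codes[rid] == manual_codes[rid] for rid in checked)
    return matches / len(checked)


def disagreements(llm_codes: dict[str, str], manual_codes: dict[str, str]) -> list[str]:
    """List respondent IDs where the two codes differ, as candidates for manual editing."""
    return [
        rid
        for rid in manual_codes
        if rid in llm_codes and llm_codes[rid] != manual_codes[rid]
    ]


if __name__ == "__main__":
    llm = {
        "r1": "AI applications",
        "r2": "Industry trends",
        "r3": "AI applications",
        "r4": "Challenges and concerns",
    }
    manual = {
        "r1": "AI applications",
        "r2": "Research methodologies",
        "r3": "AI applications",
        "r4": "Challenges and concerns",
    }
    print(f"Agreement: {agreement_rate(llm, manual):.0%}")  # prints "Agreement: 75%"
    print("Review:", disagreements(llm, manual))  # prints "Review: ['r2']"
```

Respondents listed by `disagreements` are the ones worth opening and editing first. A low agreement rate suggests the categories or the prompt need refining before you rely on the summary.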

Based on these individual results, the Summary for category outputs tab shows the distribution of categories as a chart. Nearly two-thirds of respondents mentioned AI applications in their feedback. “Challenges and concerns” and “research methodologies” each featured in approximately one in nine responses (11.4%). The remaining categories appeared less frequently.
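Each percentage in the chart is the share of respondents assigned to that category. The following is a minimal Python sketch of the same calculation, assuming you have exported one main category per respondent. The labels in the usage example are made up for illustration and are not the survey data from this example.

```python
from collections import Counter


def category_distribution(codes: list[str]) -> list[tuple[str, float]]:
    """Return (category, percentage of respondents) pairs, most frequent first."""
    if not codes:
        return []
    counts = Counter(codes)
    total = len(codes)
    # most_common() sorts by count; ties keep the order first encountered.
    return [(category, 100 * n / total) for category, n in counts.most_common()]


if __name__ == "__main__":
    # Illustrative labels only: one main category per respondent.
    codes = ["AI applications"] * 6 + [
        "Challenges and concerns",
        "Research methodologies",
        "Industry trends",
    ]
    for category, pct in category_distribution(codes):
        print(f"{category}: {pct:.1f}%")
```

With these nine illustrative respondents, “AI applications” comes out at 66.7% and each remaining category at 11.1%. Because every respondent has exactly one main category, the percentages always sum to 100%.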

Best practices for open-end coding

- Create comprehensive categories that cover most expected responses while keeping an “Other” option for outliers.

- Use clear category definitions that distinguish between similar concepts to ensure accurate classification.

- Test iteratively by previewing results and refining categories based on sample response patterns.

- Maintain coding consistency by using specific criteria rather than subjective judgements in category descriptions.
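These practices also apply when you export the results and check them yourself. The following is a minimal Python sketch, assuming a hypothetical codebook of your own. The category names follow this example, but the definitions and function names are illustrative assumptions, not Conjointly features. The sketch keeps one criterion-based definition per category, maps any label that is not in the codebook to “Other”, and reports the share of responses that land in “Other”.

```python
# Hypothetical codebook: category names mapped to short, criteria-based definitions.
CODEBOOK: dict[str, str] = {
    "AI applications": "Mentions using AI or machine learning in research work.",
    "Research methodologies": "Discusses study design, sampling or data collection methods.",
    "Challenges and concerns": "Describes problems, risks or worries about the industry.",
    "Other": "Does not fit any category above.",
}


def normalise_code(raw_label: str, codebook: dict[str, str] = CODEBOOK) -> str:
    """Map a raw label to a codebook category (case-insensitive); fall back to 'Other'."""
    lookup = {name.casefold(): name for name in codebook}
    return lookup.get(raw_label.strip().casefold(), "Other")


def other_share(labels: list[str], codebook: dict[str, str] = CODEBOOK) -> float:
    """Share of responses that end up in 'Other' after normalisation."""
    coded = [normalise_code(label, codebook) for label in labels]
    return coded.count("Other") / len(coded) if coded else 0.0


if __name__ == "__main__":
    sample = ["AI applications", "  research methodologies", "Pricing", "AI Applications"]
    print([normalise_code(label) for label in sample])
    print(f"Share in 'Other': {other_share(sample):.0%}")  # prints "Share in 'Other': 25%"
```

If the “Other” share stays high after a few preview rounds, the categories are probably not yet comprehensive enough. Review those responses and consider adding or redefining a category.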

Other Deep probe use cases

You can also read about: